Daisy-Chaining iOS Flaws

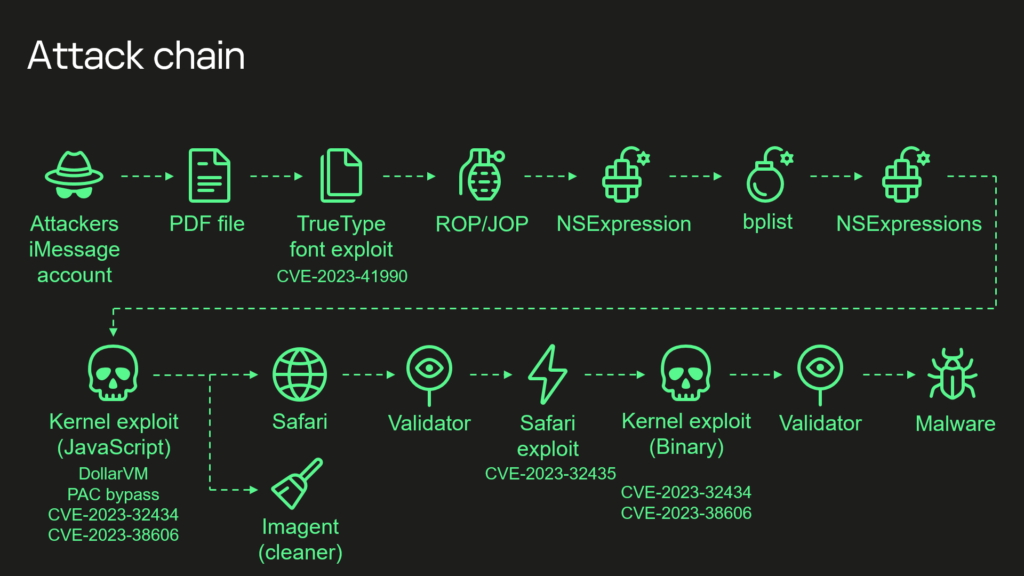

Kaspersky recently reported attacks targeting researchers at the company. Specifically, the attackers used a zero-click exploit against those researchers’ iPhones.

Of course, if you poke a bear with a stick, you can expect a reaction. The Kaspersky researchers dug into what was happening to their devices and broke it down piece by piece.

It’s a fantastic read and is reported in detail here and here.

The researchers acquired forensics from the devices and were able to start tracking how the devices were being exploited. iMessage. Zero-click. Ouch!

Kaspersky also had to effectively spy on its own devices to try to grab the iMessages that were taking over the devices. Not only were the messages zero-click, they also deleted the evidence (certainly within the UI) that a message had ever existed.

Eventually, they were able to start breaking down the traffic from the devices using local proxy logs. They were finally able to trace the attack to an inbound payload, a .watchface file – something iOS would try to interpret and execute. Bingo!

It turns out that Apple Silicon, namely Bionic chips A12 through A16, had an undocumented hardware feature, and that feature was one link in the chain of exploits used to take ownership of the device.

Each version of these Bionic SoCs has a “secret backdoor” at a specific location, and access to it is guarded by a hash built on an S-box lookup table (in effect, a secret you need to know before you can use that backdoor). The attackers somehow found out about this and then leveraged some other flaws to use it remotely.
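To make the idea of a hash-guarded feature a little more concrete, here is a toy sketch of how such a gate could work: a write is only accepted when it arrives with a hash derived from the data and a lookup table. Everything in it is an assumption for illustration only. The table values, the 32-byte block size, and the names `SBOX`, `sbox_hash` and `gated_write` are made up, and none of it is the real table or algorithm in Apple's hardware.

```python
import random

# Hypothetical lookup table: 256 made-up 10-bit values (NOT the real table).
_rng = random.Random(0)
SBOX = [_rng.randrange(1 << 10) for _ in range(256)]


def sbox_hash(data: bytes) -> int:
    """XOR together one table entry for every set bit in the data."""
    if len(data) > 32:
        raise ValueError("this toy hash only covers up to 32 bytes (256 bits)")
    acc = 0
    for byte_index, byte in enumerate(data):
        for bit in range(8):
            if (byte >> bit) & 1:
                acc ^= SBOX[8 * byte_index + bit]
    return acc


def gated_write(memory: dict, address: int, data: bytes, supplied_hash: int) -> bool:
    """Accept the write only if the caller supplies the matching hash."""
    if supplied_hash != sbox_hash(data):
        return False  # caller does not know the secret: write refused
    memory[address] = data
    return True


if __name__ == "__main__":
    memory = {}
    payload = bytes(range(32))
    print(gated_write(memory, 0x1000, payload, sbox_hash(payload)))  # right hash
    print(gated_write(memory, 0x2000, payload, 0))                   # a guess
```

The point of the sketch is that nothing here is cryptographically strong: anyone who learns the table and the recipe can compute a valid hash, which is exactly why a gate like this is only as safe as the secret behind it.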

Apple has layer upon layer of protection for iOS devices, and the exploit also managed to bypass the Page Protection Layer (PPL), which is important for protecting the RAM and its contents.

Introducing CVE-2023-38606 (a feature, not a bug?)

A vital part of this attack chain – and fixed as of iOS 16.6 – is the flaw that Apple documents as an “app may be able to modify sensitive kernel state.” It is, in basic terms, a door into the heart and mind of iOS and any Apple device running that code.
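If you look after a handful of devices and want to know which ones are still behind that fix, a version comparison is enough. The snippet below is a minimal sketch that assumes you already have the iOS version as a string such as "16.5.1"; the names `parse_version`, `PATCHED_FROM` and `has_fix` are hypothetical. It only encodes the iOS 16.6 threshold mentioned above and ignores any fixes that may have been backported to older release lines.

```python
from typing import Tuple

# First release that contains the fix, per the paragraph above.
PATCHED_FROM = (16, 6)


def parse_version(version: str) -> Tuple[int, ...]:
    """Turn '16.5.1' into (16, 5, 1) so versions compare numerically."""
    return tuple(int(part) for part in version.strip().split("."))


def has_fix(version: str) -> bool:
    """True if the given iOS version is 16.6 or later."""
    return parse_version(version) >= PATCHED_FROM


if __name__ == "__main__":
    for v in ["16.5.1", "16.6", "16.10", "17.0"]:
        print(v, has_fix(v))
```

Comparing tuples of integers rather than raw strings matters here: as plain text, "16.10" would sort before "16.6".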

It is likely that this attack chain was being managed by a nation-state threat actor or an entity with similar resources and goals. Being able to remotely take control of an iPhone without the user doing or knowing anything is something certain governments and institutions will always be looking for. And there will doubtless be more such exploits; such is life.

So what about this functionality, this design in the Apple SoC, that appears to have been an undocumented backdoor? Apple certainly knows about it because they designed it. What they did not expect, though, was that it would be abused remotely, chained in a row of flaws allowing a device to be taken over.

So what could this functionality exist for? There are breadcrumbs suggesting that it could be there for support and maintenance reasons.

- When you need support for a device, the Apple support people in the store appear to be able to connect to the device you bring in once a setting is changed; they can then get the serial number and other information from the device. The expectation is that this depends on a local connection that leverages something like this “flaw” to connect into customer devices from a blackbox-like environment (one they hoped would keep the secret safe).

- Devices in stock need some maintenance, especially when they were built and prepared with an early full release of iOS on them. It was reported in 2023 that Apple would have a method for updating the device while it is still in the box.

It seems that anything intended to leverage such a feature/flaw was always expected to depend on proximity to the device, and on the device either not being initialised or being unlocked with a toggle tweaked. The attackers behind Triangulation appear to have discovered this secret (which you would never expect to remain secret forever, since it relies on Security by Obscurity) and then found a method to exploit it remotely and without a click.

While this exploit has been closed with iOS updates, there are some simple lessons to learn:

- Obscurity is not security, just a delaying technique

- While iOS is pretty secure, there is always the chance that a flaw exists in the extremely complex software stack – so update regularly

- Rebooting will typically kill malware that lives only in memory and has no way to survive a restart, so if you’re worried, reboot often (it will not stop you being reinfected)

- Typical targets for this malware are politicians and journalists – everyday people are frankly not interesting to the sophisticated hackers creating these exploits

- If you are a politician or journalist, Apple has a function in iOS called “Lockdown Mode.” It will make your iPhone less enjoyable to use but it will protect against most of these attacks. This attack, like most, relies on the device doing things for you to improve your user experience, so giving up some of that convenience affords you more protection

This is a game of Whac-a-Mole, and new, similar flaws will always be sought out and possibly found. You could use a Nokia 8210 4G device for your messages and calls, but any iPhone will be linked to an email address (to which iMessages can be sent), and then you are back to relying on Security by Obscurity. Maybe that secret is easier for you to keep than a design created in a company and delivered in a supply chain...